Are you using technology to scale customer insights?

Technology lets Customer Experience programs operate at scale. It helps teams not only understand insights but, more importantly, act on them. With an abundance of CFM (Customer Feedback Management) and Voice of the Customer tools on the market that let us collect feedback at scale, there is tremendous focus on KPIs and metrics. For a list of such tools, see Gartner’s application reviews.

But that’s not what Experience Management is about.

XM is about understanding the why behind the scores

Pulling insights from open-ended feedback is a lengthy process: combing through responses, grouping similar comments based on what each customer meant, and flagging each verbatim so the feedback can be quantified. This takes an enormous amount of time, effort, and money. To scale this practice, it’s vital to implement AI. This matters even more as companies expand their product portfolios, further stretching CX teams whose bandwidth is already limited.

So how do you do this?

In a world full of technology, some human intervention can go a long way. Think of it as “training the tech,” no different from training a new hire. Many systems have text analysis AI built in, but it needs some tuning so that it learns to code comments correctly and can truly turn feedback into actionable insights. This can take several months, but it is well worth the effort to teach the machine what your customers are saying.

The research or Voice of the Customer team should drive this effort and work with key stakeholders throughout. It is an investment of time, because stakeholders across the enterprise must collaborate to ensure that each comment used in the exercise is coded correctly: interpreted for its meaning, assigned to the right category, and flagged with the appropriate keywords. It’s vital that a team of people work on this together, alongside the researchers who understand how to flag and bucket comments and who are responsible for initiating and executing the work.

It starts with manually pulling a large sample of verbatims and manually “bucketing” them based on what customers mean when they say something. Humans can tell that “My bill isn’t there” (the bill itself may be missing) is different from “I can’t find where my bill is on the site” (a navigation problem), but AI needs to learn that difference.
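To make that distinction concrete, here is a minimal Python sketch of the kind of keyword rules a team might write down while hand-coding verbatims. The category names, the regex patterns, and the `code_verbatim` helper are hypothetical illustrations, not the configuration format of any particular VoC or text analysis tool, which differ by vendor.

```python
import re

# Hypothetical codebook agreed on during manual bucketing:
# each category maps to regex patterns that signal it.
CODEBOOK = {
    "Bill missing": [r"\bbill (isn't|is not|wasn't) there\b", r"\bno bill\b"],
    "Can't find bill on site": [r"\bcan'?t find\b.*\bbill\b", r"\bwhere\b.*\bbill\b.*\bsite\b"],
}


def code_verbatim(text):
    """Return every category whose patterns match the comment."""
    # Normalize curly apostrophes and case so the patterns stay simple.
    normalized = text.replace("\u2019", "'").lower()
    return [
        category
        for category, patterns in CODEBOOK.items()
        if any(re.search(pattern, normalized) for pattern in patterns)
    ]


print(code_verbatim("My bill isn't there"))  # ['Bill missing']
```

Rules like these only capture what the team explicitly wrote down; the point of the months of training described here is that humans decide the categories and the examples, and the tool learns from them.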

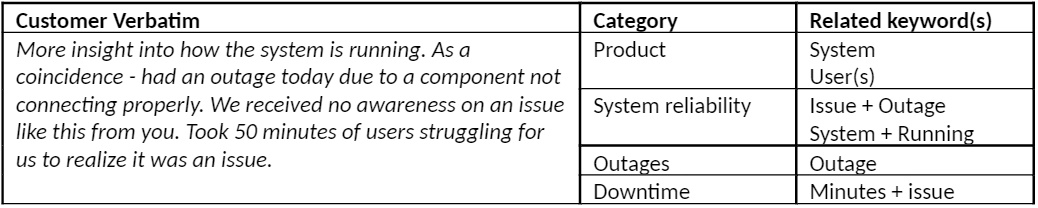

Here’s an example:

I recently completed this exercise for a customer portal feedback program. As a team of four (a researcher, product owners, and a system admin), we spent approximately three months sifting through 1,000 verbatim comments. We flagged categories, identified keywords, re-checked our work, and constantly tested the system by coding directly in the VoC tool. Each iteration of in-system coding let us double- and triple-check that every verbatim was flagged properly.
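The repeated in-system testing described here boils down to comparing the tool’s codes against the team’s hand codes and reviewing the disagreements. A minimal sketch, assuming each verbatim has an ID and exactly one category from both the humans and the tool (exported from the VoC platform in some form); the `compare_coding` function and the sample data are hypothetical.

```python
def compare_coding(human_labels, tool_labels):
    """Compare tool-assigned categories with human-assigned ones.

    Returns the share of verbatims where the tool agreed with the humans,
    plus the IDs of verbatims that need another look.
    """
    # Only compare verbatims that both humans and the tool have coded.
    shared_ids = sorted(human_labels.keys() & tool_labels.keys())
    mismatches = [vid for vid in shared_ids if human_labels[vid] != tool_labels[vid]]
    agreement = 1 - len(mismatches) / len(shared_ids) if shared_ids else 0.0
    return agreement, mismatches


# Hypothetical sample: the tool confuses a navigation issue with a missing bill.
human = {"v1": "Bill missing", "v2": "Can't find bill on site", "v3": "Bill missing"}
tool = {"v1": "Bill missing", "v2": "Bill missing", "v3": "Bill missing"}
print(compare_coding(human, tool))
```

Each round, the mismatched IDs are what the team would re-read and use to adjust the tool’s training before testing again.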

The end result

Once we were satisfied that the AI was properly coding customer comments, we let the tech do the work for us and categorize verbatims on its own.

The results were remarkable.

- It was a time-saver. The exercise eliminated the hours per week our stakeholders had been spending categorizing comments manually.

- The AI quantified responses within the feedback tool. Not only could we see the volume of each comment category, but we could also easily compare categories with one another. It also let us trend comments over time and watch for spikes, such as a “404 error code when changing an address,” and, most importantly, act on them quickly for immediate impact on our customers.

- More specific categories meant more actionable feedback. We went from generic buckets like “service delivery” to specifics like “Unclear communication on delivery timeframe,” which helped teams understand where the opportunities were and act on improvements.
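Once comments are coded automatically, trending and spike alerts come down to counting comments per category per period and flagging unusual jumps. The sketch below assumes weekly counts for a single category (say, the “404 error code when changing an address” bucket) have already been exported from the feedback tool. The trailing-window rule, the `window` and `threshold` defaults, the floor of 1.0 on the standard deviation, and the sample numbers are all illustrative assumptions, not how any specific VoC platform implements alerts.

```python
from statistics import mean, stdev


def find_spikes(weekly_counts, window=4, threshold=3.0):
    """Return indices of weeks whose count jumps well above the recent baseline.

    A week is flagged when its count exceeds the mean of the previous
    `window` weeks by more than `threshold` standard deviations.
    """
    spikes = []
    for i in range(window, len(weekly_counts)):
        history = weekly_counts[i - window:i]
        # Assumed floor of 1.0 so a perfectly flat history doesn't flag tiny changes.
        spread = max(stdev(history), 1.0)
        if weekly_counts[i] > mean(history) + threshold * spread:
            spikes.append(i)
    return spikes


# Hypothetical weekly counts for one comment category.
counts = [3, 4, 2, 3, 4, 3, 18, 5]
print(find_spikes(counts))  # [6]
```

A trailing window keeps the baseline tied to recent volume, so the jump to 18 in week 6 is flagged while normal week-to-week noise is not; teams would tune `window` and `threshold` to their own comment volumes.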

Technology has permeated our day-to-day lives in ways we could hardly have imagined. But sometimes, to work best, it needs some human intervention. For example, we have to teach Face ID how we look with and without glasses and from different angles. CX tech is no exception.

A small investment of time to get it right yields results that help turn feedback into actionable insights. And that’s the whole purpose of CX: not only to capture scores, but to understand what’s going on so teams can make changes based on what they learn from that feedback! Taking the time and energy to teach text analysis technology will not only give you better analytical results but also make the tech work for you.

-

Megan Germann is a Certified Customer Experience Professional (CCXP) and an Experience Management (XM) professional with nearly 20 years of experience designing and conducting primary and secondary market and customer research, analyzing data, and bringing key insights to life through storytelling. She has worked on both the consulting side and the client side, serving both B2B and B2C businesses. Building on her survey and feedback background, she has been instrumental in building VoC and Digital Experience programs at several tech companies. She co-authored the book "Customer Experience", writing the chapter "Building a Best-in-Class Voice of the Customer Program", and was recognized as a Global CX Thought Leader in 2020 and 2021.

Currently an Insights Manager in the Product Design space at SAP, she is building a Voice of the User program, triangulating insights from PX, UX, Product, and market research to enable product teams to take action.