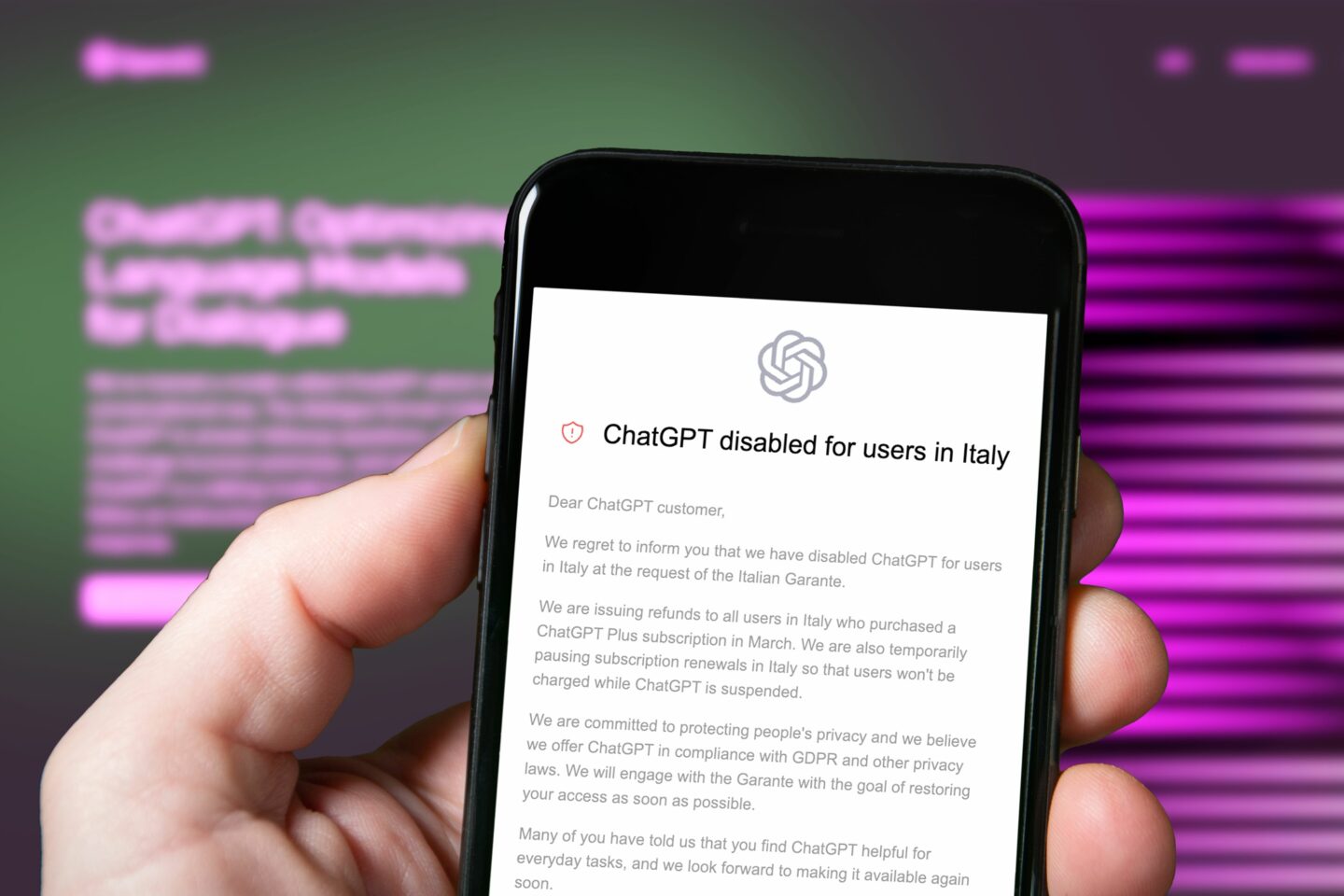

OpenAI is facing scrutiny from Italy's data protection authority, Garante, which alleges that the AI chatbot application ChatGPT violates EU data protection rules.

Garante, known for its proactive stance on AI platform compliance, had previously banned ChatGPT over alleged privacy breaches, but the service was reinstated after OpenAI addressed certain issues. The authority has now informed OpenAI that its preliminary findings indicate potential data privacy violations, though it has not provided specific details.

Not long ago, ChatGPT was also restricted in a courtroom, where a judge required attorneys appearing before him to submit a certificate affirming that AI language models like ChatGPT generated no part of their filing.

According to the Italian watchdog, OpenAI has 30 days to present its defense against the allegations. Garante's investigation, initiated last year, has raised concerns about ChatGPT's compliance with the EU's General Data Protection Regulation (GDPR). The issues include a lack of a suitable legal basis for collecting and processing personal data for training ChatGPT's algorithms, as well as the AI tool's tendency to produce inaccurate information about individuals (referred to as 'hallucination') and concerns about child safety.

OpenAI, backed by Microsoft, asserts that its practices align with EU privacy laws and that it actively works to minimize personal data in training its systems. The company plans to cooperate with Garante during the investigation.

The GDPR, in effect since 2018, allows fines of up to €20 million or 4% of a company's global annual turnover, whichever is higher. Garante's draft findings have not been disclosed, but confirmed breaches could expose OpenAI to penalties on that scale.

Can OpenAI justify its data processing within the EU?

The legal basis for processing personal data for training AI models is a crucial issue. OpenAI, having developed ChatGPT using publicly scraped data containing personal information, faces challenges in justifying its data processing within the EU. The GDPR offers six possible legal bases, with OpenAI previously relying on either consent or legitimate interests. However, seeking consent from millions of web users seems impractical, and the validity of legitimate interests is in question.

Garante's notification of violation awaits OpenAI's response, and the investigation involves collaboration with a European task force of national privacy watchdogs. OpenAI has sought to establish a physical base in Ireland, which could allow it to benefit from the GDPR's one-stop-shop mechanism, but the ongoing investigations in Italy and Poland continue.

While a task force within the European Data Protection Board aims to harmonize outcomes across ChatGPT investigations, individual authorities remain independent, and their conclusions may vary. How this latest chapter in OpenAI's regulatory challenges ends will depend on the company's response and on how each authority applies data protection rules to AI.